Novel-View Synthesis of Outdoor Sport Events Using an Adaptive View-Dependent Geometry

M. Germann, T. Popa, R. Keiser, R. Ziegler, M. Gross

Proceedings of Eurographics (Cagliari, Italy, May 13-18, 2012), Computer Graphics Forum, vol. 31, no. 2, pp. 325-333

Abstract

We propose a novel, fully automatic method for novel-viewpoint synthesis. Our method robustly handles multi-camera setups featuring wide baselines in an uncontrolled environment. In a first step, robust and sparse point correspondences are found based on an extension of the Daisy features [Tola09]. These correspondences, together with back-projection errors, are used to drive a novel adaptive coarse-to-fine reconstruction method that approximates detailed geometry while avoiding an extreme triangle count. To render the scene from arbitrary viewpoints, we use a view-dependent blending of color information in combination with a view-dependent geometry morph. The view-dependent geometry compensates for misalignments caused by calibration errors. We demonstrate that our method works well under arbitrary lighting conditions with as few as two cameras featuring wide baselines. The footage, taken from real sports broadcast events, contains fine geometric structures, which result in good novel-viewpoint renderings despite the low resolution of the images.

Overview

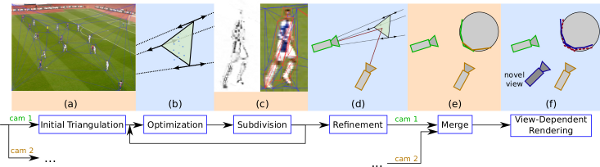

Our algorithm computes a 2.5D reconstruction for each camera, treating it in turn as the base camera (Fig. 1 (a)-(d)). In a first step, an initial triangulation is created (Fig. 1 (a)). This is followed by iterations that alternate between depth optimization of the triangle vertices (Fig. 1 (b)) and subdivision steps. The depth optimization uses feature point matches from the base camera to the other cameras, together with rough calibrations. In the subdivision step, a back-projection error is evaluated to determine whether a triangle could not be fit to the geometry and therefore needs to be subdivided. After a refinement of the depth values according to back-projection errors (Fig. 1 (d)), the 2.5D reconstructions of the different base cameras are merged into a final 3D geometry.
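The error-driven subdivision loop described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the implementation from the paper: the 1-to-4 midpoint split, the names `refine`, `subdivide`, `error_fn`, `threshold`, and `max_levels` are all hypothetical, and the depth optimization that would run between subdivision steps is left out. The caller supplies `error_fn`, which stands in for the back-projection error of a triangle.

```python
from typing import Callable, List, Tuple

Point = Tuple[float, float]            # vertex position in the base camera image
Triangle = Tuple[Point, Point, Point]


def midpoint(a: Point, b: Point) -> Point:
    return ((a[0] + b[0]) / 2.0, (a[1] + b[1]) / 2.0)


def subdivide(tri: Triangle) -> List[Triangle]:
    """Split one triangle into four via edge midpoints (an assumed scheme)."""
    a, b, c = tri
    ab, bc, ca = midpoint(a, b), midpoint(b, c), midpoint(c, a)
    return [(a, ab, ca), (ab, b, bc), (ca, bc, c), (ab, bc, ca)]


def refine(
    initial: List[Triangle],
    error_fn: Callable[[Triangle], float],  # stand-in for the back-projection error
    threshold: float,
    max_levels: int,
) -> List[Triangle]:
    """Coarse-to-fine refinement: only triangles with a high error are split."""
    accepted: List[Triangle] = []
    pending = list(initial)
    for _ in range(max_levels):
        # A depth optimization of the vertices would run here (omitted).
        still_bad: List[Triangle] = []
        for tri in pending:
            if error_fn(tri) > threshold:
                still_bad.extend(subdivide(tri))
            else:
                accepted.append(tri)
        pending = still_bad
        if not pending:
            break
    # Triangles still pending after max_levels are kept as they are.
    return accepted + pending


def triangle_area(tri: Triangle) -> float:
    """Toy error measure used only to exercise the loop."""
    (ax, ay), (bx, by), (cx, cy) = tri
    return abs((bx - ax) * (cy - ay) - (cx - ax) * (by - ay)) / 2.0
```

Because only triangles whose error exceeds the threshold are split, the triangle count grows where the geometry is poorly approximated and stays low elsewhere, which is the stated goal of the adaptive scheme.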

Instead of using only projective textures with view-dependent blending, we propose a view-dependent geometry morph (Fig. 1 (f)). It reduces texture misalignments caused by calibration errors. The 3D geometry morph is computed from feature matches that do not lie on each other's epipolar lines. These non-epipolar matches are used to determine the calibration error locally.
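To make the notion of a non-epipolar match concrete, the sketch below measures how far a matched point lies from the epipolar line of its partner. It assumes that a fundamental matrix `F` between the base camera and another camera is available from the rough calibration; the function names and the pixel tolerance `tolerance_px` are hypothetical, and how the paper turns these offsets into the geometry morph is not shown here.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]
Matrix3 = Sequence[Sequence[float]]  # 3x3 fundamental matrix, row-major


def epipolar_line(F: Matrix3, p: Point) -> Tuple[float, float, float]:
    """Line l = F * (x, y, 1)^T in the other image, as coefficients (a, b, c)."""
    x = (p[0], p[1], 1.0)
    a, b, c = (sum(F[r][k] * x[k] for k in range(3)) for r in range(3))
    return (a, b, c)


def epipolar_distance(F: Matrix3, p_base: Point, p_other: Point) -> float:
    """Distance in pixels from p_other to the epipolar line of p_base."""
    a, b, c = epipolar_line(F, p_base)
    norm = math.hypot(a, b)
    if norm == 0.0:
        raise ValueError("degenerate epipolar line")
    return abs(a * p_other[0] + b * p_other[1] + c) / norm


def non_epipolar_matches(
    F: Matrix3,
    matches: List[Tuple[Point, Point]],
    tolerance_px: float,  # assumed threshold, not taken from the paper
) -> List[Tuple[Point, Point, float]]:
    """Keep matches that violate the epipolar constraint, with their distance."""
    result = []
    for p_base, p_other in matches:
        d = epipolar_distance(F, p_base, p_other)
        if d > tolerance_px:
            result.append((p_base, p_other, d))
    return result
```

With a perfect calibration, every correct match would have a distance of zero, so a nonzero distance on a reliable match is a local measurement of the calibration error.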

Results

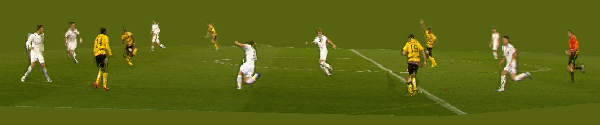

Our method is able to reconstruct the geometry in difficult outdoor setups with wide baselines, low resolution coverage, weak calibrations, and motion blur. In the paper and the video, we show results that were generated with only two standard TV cameras as a source. Currently, no temporal coherence is used. Nevertheless, we show results on sequences that demonstrate the ability to process many frames and that contain only minor flickering.

The view-dependent geometry significantly improves the result compared to standard projective texturing. The ghosting artifacts (duplication of object parts) are reduced to a minimum, while the geometry, and thus the rendered images, still interpolate the original camera views.

Downloads

[PDF] | [BibTeX]