Digital Character AI

Introduction

Digital characters are becoming increasingly popular in domains such as entertainment, medicine, education, and sales. Our research aims to develop data-driven methods for building affect-aware conversational agents, speech synthesis, and animations that make interactions with digital characters more natural, engaging, and rewarding. Affect-awareness is at the core of all projects: by relying on affective states, interactions with digital characters can be tailored to the emotions of the user.

Topics

Digital Einstein Platform

ETH Zurich, together with the ETH spin-off Animatico, developed an interactive platform on which users can talk to a digital Albert Einstein character. Key technologies include natural-language processing and dynamic rendering of the character's expressions and body language. The visible parts of the platform, a screen and a comfy-looking armchair, are packed with technology.

To help Einstein identify interlocutors and ask them questions, a small camera tracks people's movements and reactions. Whenever someone addresses Einstein, microphones isolate their voice from the ambient noise. Their words are then converted into text and analyzed by language-processing software. The program uses machine learning to determine what the speaker means and then employs a dialogue algorithm to choose the most appropriate answer, along with matching expressions and gestures, from a finite set of possible reactions.

The answers are part of a predefined dialogue tree that encompasses various topics, such as Einstein's famous theories, his time as a student, and his personal friendships. An algorithm adds an element of randomness to the answers so that the dialogue keeps taking new twists and turns. If, for example, the Digital Einstein notices that his dialogue partner has turned away, he will promptly ask whether they are distracted or bored. If he doesn't understand something, he will apologize and ask the person to repeat the question.
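The selection step described above can be illustrated with a minimal sketch. It is not the platform's actual implementation: the `DialogueNode` structure, the gesture labels, and the fallback texts are hypothetical, and a simple keyword overlap stands in for the machine-learning intent recognition the platform uses.

```python
import random
import re
from dataclasses import dataclass


@dataclass
class DialogueNode:
    """One topic in a hypothetical dialogue tree."""
    topic: str
    keywords: set[str]
    responses: list[tuple[str, str]]  # (answer text, gesture label)


# Hypothetical canned reactions for the two special cases in the text.
FALLBACK = ("I am sorry, could you repeat the question?", "apologetic_shrug")
ATTENTION_CHECK = ("Are you distracted, or am I boring you?", "lean_forward")


def match_topic(utterance, nodes):
    """Return the node sharing the most keywords with the utterance, or None.

    Assumption: keyword overlap is only a stand-in for a learned intent model.
    """
    words = set(re.findall(r"[a-z']+", utterance.lower()))
    best, best_overlap = None, 0
    for node in nodes:
        overlap = len(node.keywords & words)
        if overlap > best_overlap:
            best, best_overlap = node, overlap
    return best


def choose_reaction(utterance, user_is_attentive, nodes, rng):
    """Pick an (answer, gesture) pair from a finite set of reactions."""
    if not user_is_attentive:
        # The user has turned away: ask whether they are distracted or bored.
        return ATTENTION_CHECK
    node = match_topic(utterance, nodes)
    if node is None:
        # Nothing understood: apologize and ask for a repetition.
        return FALLBACK
    # Random choice among the topic's answers keeps the dialogue varied.
    return rng.choice(node.responses)


if __name__ == "__main__":
    tree = [
        DialogueNode(
            topic="relativity",
            keywords={"relativity", "theory", "spacetime"},
            responses=[
                ("Let me tell you about relativity.", "raise_eyebrows"),
                ("Ah, my favorite subject.", "smile"),
            ],
        ),
    ]
    print(choose_reaction("Tell me about relativity!", True, tree, random.Random()))
```

Passing the random number generator in as `rng` is a design choice for this sketch: a seeded `random.Random` makes the otherwise varied answers reproducible when testing.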

Affective Computing

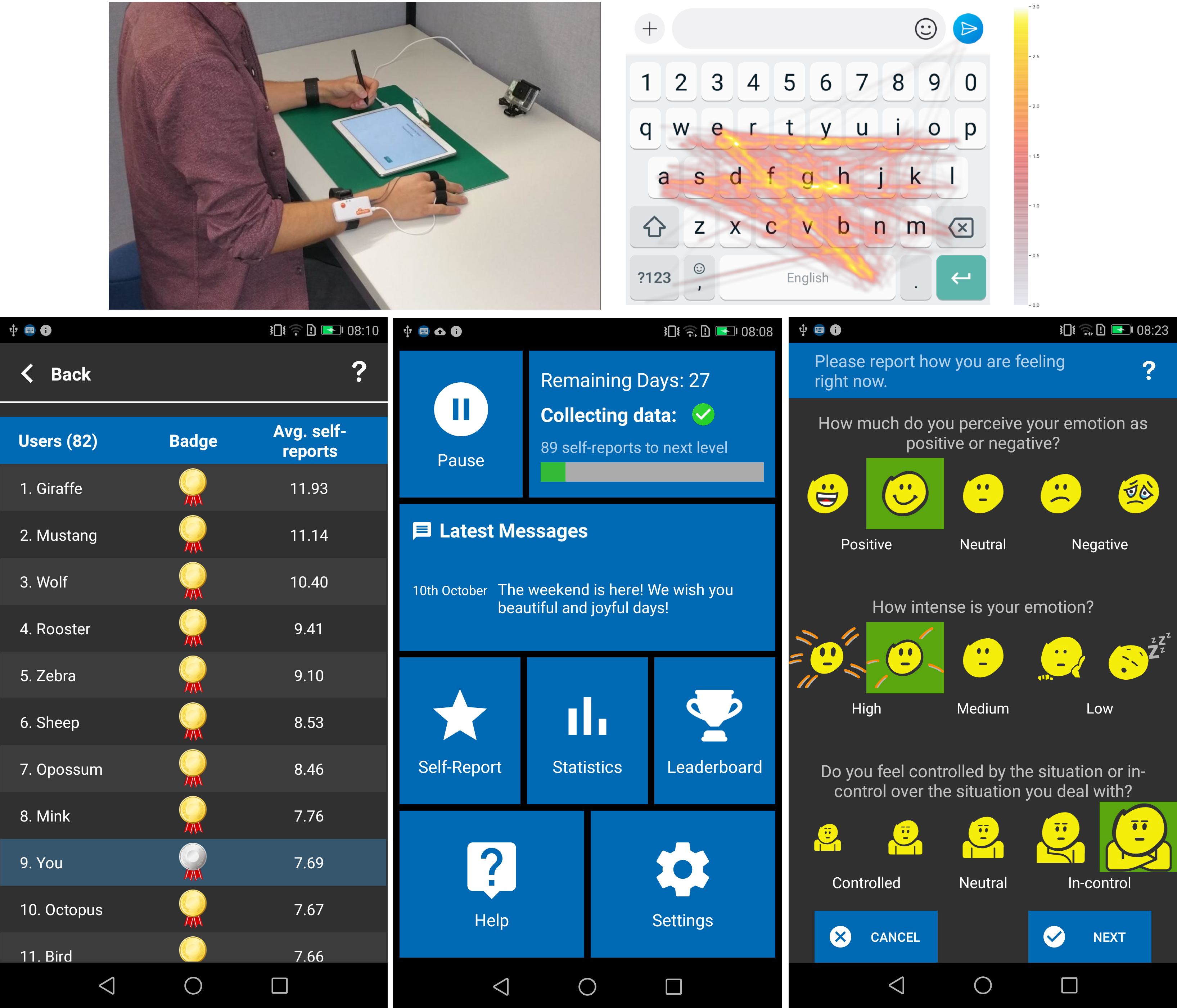

Awareness of affective states makes it possible to use emotional information as additional context when designing emotionally sentient systems. Applications of such systems are manifold. For example, the learning gain in educational settings can be increased by incorporating targeted interventions that adjust to the affective states of students. Another application is enabling digital characters and smartphones to support enriched interactions that are sensitive to the user's context.

In our projects we focus on data-driven models relying on lightweight data collection tailored to mobile settings. We have developed systems for affective state prediction based on camera recordings (i.e., action units, eye gaze, eye blinks, and head movement), low-cost mobile biosensors (i.e., skin conductance, heart rate, and skin temperature), handwriting data, and smartphone touch and sensor data. We developed novel input representations (e.g., two-dimensional heat maps for encoding smartphone touch and sensor data) and deep learning networks (e.g., a semi-supervised deep learning pipeline based on a variational autoencoder).

To evaluate our systems, we have collected large-scale datasets in the lab and in the wild encompassing tablet-based math-solving tasks, emotional stimuli from pictures, smartphone-based text conversations, and free interactions with smartphones over 10 weeks.
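As an illustration of the heat-map idea mentioned above, the following minimal sketch bins touch events into a two-dimensional grid. It is an assumption-laden example rather than the representation used in the publications: the `(x, y, pressure)` event format, the grid size, the pressure accumulation, and the peak normalization are all hypothetical choices.

```python
def touch_heatmap(touches, width, height, rows=8, cols=8):
    """Bin (x, y, pressure) touch events into a rows x cols heat map.

    Assumptions (not from the source): each cell accumulates pressure,
    and the map is scaled so that its largest cell equals 1.0.
    """
    grid = [[0.0] * cols for _ in range(rows)]
    for x, y, pressure in touches:
        if not (0 <= x < width and 0 <= y < height):
            continue  # ignore events outside the screen area
        row = int(y / height * rows)
        col = int(x / width * cols)
        grid[row][col] += pressure
    peak = max(max(row) for row in grid)
    if peak > 0:
        grid = [[value / peak for value in row] for row in grid]
    return grid


if __name__ == "__main__":
    # Hypothetical events on a 100 x 100 pixel screen.
    events = [(10, 10, 0.5), (15, 12, 0.5), (90, 90, 0.25)]
    for row in touch_heatmap(events, width=100, height=100, rows=2, cols=2):
        print(row)
```

A fixed-size grid like this gives sessions of different lengths the same input shape, which is one reason image-like encodings are convenient inputs for convolutional or autoencoder-style networks.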

Publications

2023

Personality Trait Recognition Based on Smartphone Typing Characteristics in the Wild

IEEE Transactions on Affective Computing, IEEE, vol. 14, no. 4, 2023, pp. 3207-3217


2022

Affective State Prediction from Smartphone Touch and Sensor Data in the Wild

SIGCHI Conference on Human Factors in Computing Systems (CHI '22) (New Orleans, Louisiana, USA, April 29 - May 5, 2022), pp. 1-14


2020

Image Reconstruction of Tablet Front Camera Recordings in Educational Settings

The 13th International Conference on Educational Data Mining (EDM) (Ifrane, Morocco, July 10-13, 2020), pp. 245-256


Glyph-Based Visualization of Affective States

EuroVis (Norrköping, Sweden, May 25-29, 2020), pp. 121-125


Affective State Prediction Based on Semi-Supervised Learning from Smartphone Touch Data

Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems (CHI '20) (Honolulu, Hawaii, April 25-30, 2020), pp. 377:1-13


2019

Affective State Prediction in a Mobile Setting using Wearable Biometric Sensors and Stylus

The 12th International Conference on Educational Data Mining (EDM) (Montreal, Canada, July 2-5, 2019), pp. 224-233
